A recent paper, published in Nature, explores a critical issue facing generative AI models, termed "model collapse", which occurs when models are trained on data generated by earlier models rather than on genuine human-generated content. This process leads to a gradual and irreversible degradation in model performance, as the models lose their ability to accurately represent the true underlying data distribution. The phenomenon is of particular concern because large language models (LLMs) and other generative models contribute an ever larger share of the content found on the internet, raising the risk that future models will be trained on increasingly synthetic data rather than on data rooted in real-world human behaviour.

The paper raises some important questions that we must ask ourselves.

Why Does Model Collapse Happen?

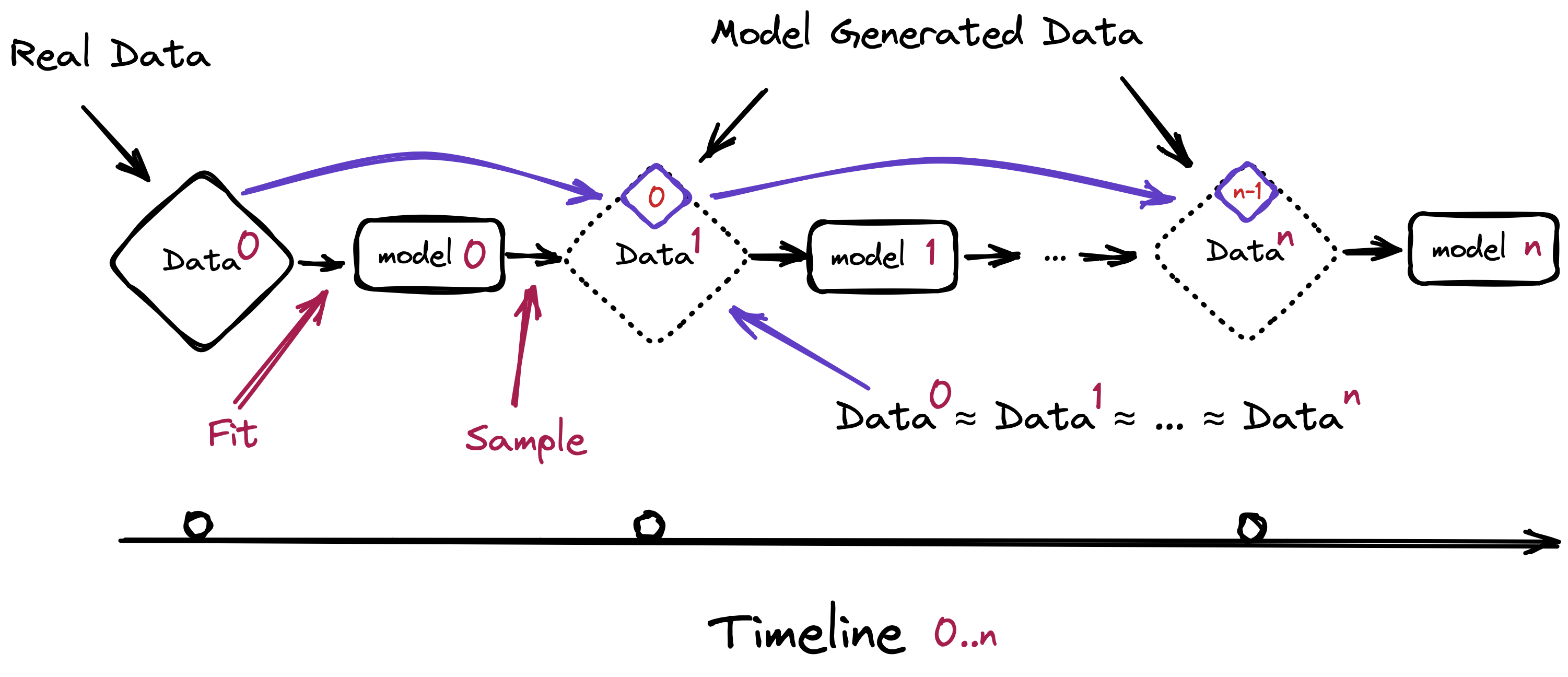

Consider a generative “model 0” (e.g. an LLM) trained on dataset “data 0” (e.g. content scraped from the internet), which produces some output. At some later point, another “model 1” is trained on a larger dataset, “data 1”, freshly scraped from the internet. The issue is that the outputs of model 0 will by then have polluted data 1, potentially to the point where most of data 1 is in fact generated content.

As this process repeats over successive generations, up to some model “n”, the effect compounds, as the authors' diagram above illustrates.

The problems start because, as the authors empirically demonstrate, models trained on synthetic data do a worse job of representing the real world than models trained on authentic data. Over time, this effect compounds and leads to models totally divorced from reality.

The paper identifies two stages of model collapse: “early model collapse”, in which rare events are underestimated, and “late model collapse”, in which the distribution converges to a state that no longer resembles the original data, often with significantly reduced variance. The authors argue that the issue is universal, demonstrating it across several model types, including LLMs, variational autoencoders (VAEs), and Gaussian mixture models (GMMs).
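A toy simulation makes the late-stage loss of variance concrete. The sketch below is our own simplification, not the authors' experimental code: each "model" is just a one-dimensional Gaussian, fitted by maximum likelihood to a finite sample drawn from the previous generation's Gaussian. The function name `simulate_gaussian_collapse` and its parameters are hypothetical.

```python
import random
import statistics


def simulate_gaussian_collapse(generations: int, n_samples: int, seed: int = 0) -> list[float]:
    """Toy recursive-training loop with a 1-D Gaussian as the 'model' (hypothetical helper).

    Generation 0 is the true (human) distribution N(0, 1). Every later
    generation is fitted only to samples drawn from the previous generation.
    Returns the fitted standard deviation at each generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        # "Scrape" a finite dataset produced entirely by the current model.
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # "Train" the next model: maximum-likelihood Gaussian fit.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)
        history.append(sigma)
    return history


history = simulate_gaussian_collapse(generations=200, n_samples=10)
print(f"generation 0: sigma = {history[0]:.3f}")
print(f"generation 200: sigma = {history[-1]:.3g}")
```

With small samples the fitted standard deviation typically drifts from 1.0 towards zero: each fit slightly underestimates the spread on average, and because every generation starts from the previous estimate, these errors compound multiplicatively rather than averaging out.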

How Does It Happen?

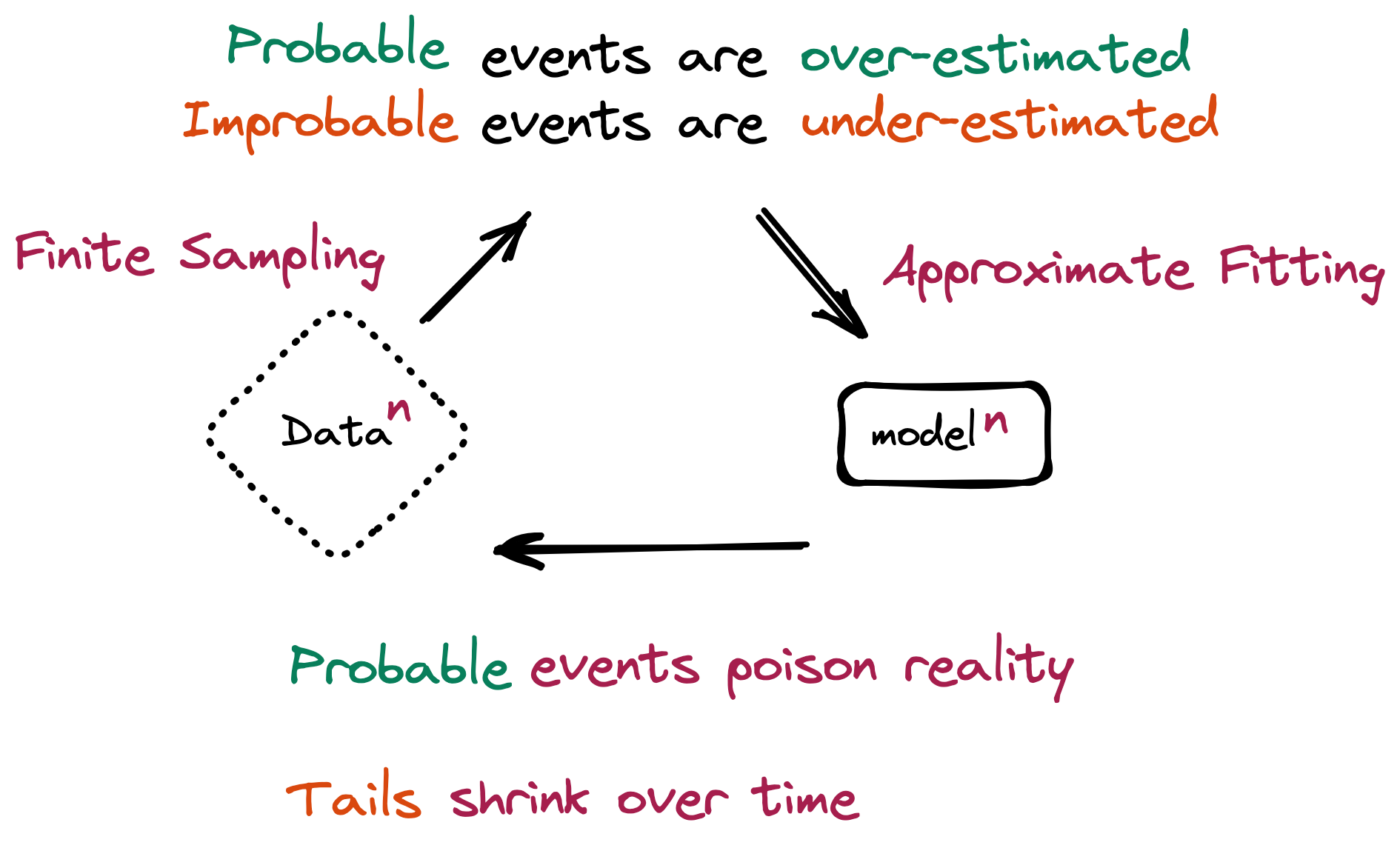

The authors outline three primary sources of error that contribute to the phenomenon:

- Statistical Approximation Error: Occurs due to the finite number of samples available, leading to the loss of rare events.

- Functional Expressivity Error: Arises when the model's architecture is not capable of fully capturing the original data's complexity, such as attempting to fit a complex distribution with a simpler one.

- Functional Approximation Error: Results from limitations in the training process, including biases inherent in optimization algorithms or choice of training objectives.
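The first of these, statistical approximation error, can be illustrated with a discrete toy example. In this hypothetical sketch (not the paper's code; `resample_generations` is an illustrative name), each generation re-estimates category frequencies from a finite sample of the previous generation's estimate. Once a rare category happens to draw zero samples, its estimated probability becomes zero and it can never reappear.

```python
import random
from collections import Counter


def resample_generations(
    probs: dict[str, float], n_samples: int, generations: int, seed: int = 0
) -> list[dict[str, float]]:
    """Repeatedly sample from a categorical 'model' and refit it to its own output.

    Returns the estimated distribution at every generation (index 0 is the input).
    """
    rng = random.Random(seed)
    categories = list(probs)
    history = [dict(probs)]
    for _ in range(generations):
        weights = [probs[c] for c in categories]
        # Draw a finite "dataset" from the current model.
        sample = rng.choices(categories, weights=weights, k=n_samples)
        counts = Counter(sample)
        # "Train" the next model: empirical frequencies (maximum-likelihood estimate).
        # A category with zero count gets probability 0 and is lost for good.
        probs = {c: counts[c] / n_samples for c in categories}
        history.append(probs)
    return history


runs = resample_generations({"common": 0.9, "uncommon": 0.09, "rare": 0.01}, n_samples=100, generations=50)
print(runs[-1])
```

With 100 samples per generation, the "rare" category has a 0.99^100 ≈ 37% chance of vanishing in the very first generation. Run for long enough, the distribution tends to collapse onto a single category, a discrete analogue of the early and late stages described above.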

The combined effect of these three sources of error is that models tend to overestimate the likelihood of common occurrences in the data and underestimate the “tails” of less common occurrences. This creates a self-reinforcing feedback loop in which the model's view of the world narrows with each generation.

These errors accumulate over generations of model training, leading to increasing divergence from the true data distribution and the eventual collapse of the model's capacity to generalise.
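The tendency to underestimate tails is easy to reproduce when the model family is too simple, which is the functional expressivity error listed above. This hypothetical sketch swaps in an example of our own rather than the paper's: heavy-tailed "real" data from a Laplace distribution, modelled with a single Gaussian. The function name and the choice of Laplace data are assumptions made purely for illustration.

```python
import math
import random
import statistics


def tail_mass_comparison(threshold: float, n_samples: int = 50_000, seed: int = 0) -> tuple[float, float]:
    """Return (true, fitted) values of P(|x| > threshold) (hypothetical helper).

    'Real' data come from a heavy-tailed Laplace(0, 1) distribution; the 'model'
    is a single Gaussian fitted to a sample of that data by maximum likelihood.
    """
    rng = random.Random(seed)
    # Laplace(0, 1) draw: an Exponential(1) magnitude with a random sign.
    data = [rng.choice((-1.0, 1.0)) * rng.expovariate(1.0) for _ in range(n_samples)]
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data, mu)
    # Exact two-sided tail of Laplace(0, 1): P(|x| > t) = exp(-t).
    true_tail = math.exp(-threshold)
    # Two-sided tail of the fitted Gaussian N(mu, sigma^2): P(x > t) + P(x < -t).
    scale = sigma * math.sqrt(2.0)
    fitted_tail = 0.5 * math.erfc((threshold - mu) / scale) + 0.5 * math.erfc((threshold + mu) / scale)
    return true_tail, fitted_tail


true_tail, fitted_tail = tail_mass_comparison(6.0)
print(f"true P(|x| > 6) = {true_tail:.2e}, fitted Gaussian = {fitted_tail:.2e}")
```

For a threshold of 6, the true tail mass is e^-6 ≈ 0.0025, while a Gaussian with the fitted standard deviation of about √2 assigns roughly erfc(3) ≈ 2 × 10^-5, around a hundred times less. A model trained on that Gaussian's outputs would see such extreme values even more rarely.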

How Can We Counter It?

To counter this effect, we need to be able to reliably identify original, human-generated data, and use it to preserve model fidelity over time. Doing so will require standards and safeguards that, sadly, have yet to be widely adopted.

Without such safeguards, the increasing prevalence of AI-generated content could lead to a self-reinforcing cycle of degradation, in which models trained on synthetic data drift ever further from real-world distributions. The authors suggest some mitigation strategies, such as community-wide coordination to track the provenance of data and ensure models are trained on a diverse mix of genuine and synthetic content. But as of this writing in 2024, it is unclear how such coordinated efforts will come about.
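The value of retaining genuine data can also be seen in a toy setting. The sketch below is our own simplification with hypothetical names: a one-dimensional Gaussian is repeatedly fitted and resampled, but each generation's training set mixes a fixed fraction of fresh samples from the true N(0, 1) distribution with synthetic samples from the previous model. This loosely mirrors the authors' observation that preserving some original data reduces degradation; it is not their experimental setup.

```python
import random
import statistics


def simulate_with_real_data(
    generations: int, n_samples: int, real_fraction: float, seed: int = 0
) -> list[float]:
    """Toy Gaussian fit-and-resample loop with a share of genuine data (hypothetical helper).

    Returns the fitted standard deviation at each generation.
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    n_real = round(real_fraction * n_samples)
    for _ in range(generations):
        # Fresh human-generated data from the true distribution N(0, 1).
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        # Synthetic data produced by the current model.
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_samples - n_real)]
        data = real + synthetic
        # Fit the next model to the mixed training set.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)
        history.append(sigma)
    return history


anchored = simulate_with_real_data(generations=200, n_samples=1000, real_fraction=0.1)
print(f"final sigma with 10% real data: {anchored[-1]:.3f}")
```

With `real_fraction=0.0` and small samples the fitted spread typically shrinks towards zero, whereas with `real_fraction=0.1` and `n_samples=1000` it tends to stay close to 1.0, because the genuine samples keep pulling each fit back towards the true distribution.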

What If We Fail?

Model collapse is a concerning phenomenon with far-reaching implications for the future of generative AI, and for the corpus of recorded human knowledge itself.

As AI-generated content becomes more pervasive, the quality and reliability of models trained on such data are at risk of significant decline. Failure to address these challenges could lead to a future where generative models lose their capacity to meaningfully interact with the complexities of human language and behaviour.

While this would make generative AI a less effective and less reliable tool, I personally believe it would do little to reduce its adoption or prevalence. The end result could be that all our content sources (including books, video and the internet itself) are flooded with meaningless drivel that increasingly fails to represent the real world. I believe this is a harm too great for us to ignore.