No, this is not another hot take about how AI is going to replace software engineers. Instead, I would like to explore the idea that, over time, AI might replace software itself. Which might lead you to ask - what exactly is the distinction?

Well, as Large Language Models (LLMs) have continued to improve in the last few years, it has become something of a pressing issue in software circles to consider whether the role of the software engineer is at risk. The unreasonable effectiveness of LLMs at performing increasingly complex knowledge work, particularly in domains that are entirely or predominantly text-based, and a continuing upward trend in model capabilities, has led many to believe that it is only a matter of time before software will primarily be written not by humans, but by autonomous AI agents. The recent and admittedly awesome trend for vibe-coding is only making this feel more pressing.

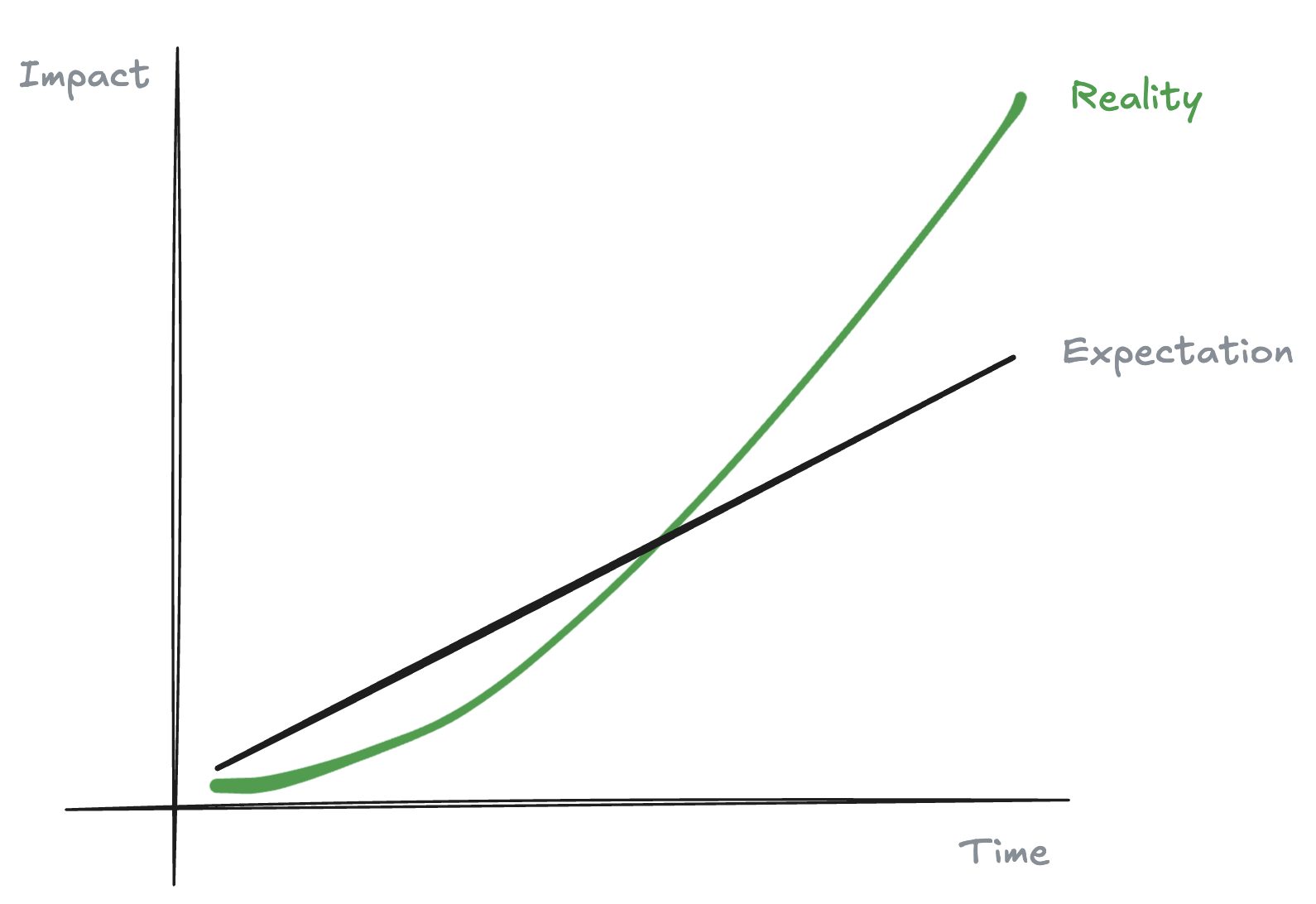

My view is that (as is often the case with fundamental technological shifts) the short term impact is perhaps being overstated, but the long term impact is likely being underestimated. Vibe-coding is great fun for quickly spinning up a toy app. But serious engineering of software that meets the many high standards we expect (for usability, speed, performance, reliability, security, data privacy etc) still requires the expertise, nuance and craft of experienced humans.

Nonetheless, if you pull at this thread a little harder, you will realise that more starts to unravel than the careers of humans like me who sit and write “code” all day. I believe that the nature of exponential change we are seeing with generative AI may very well lead to a situation in which software - i.e. code - ceases to be something that is written at all - by intelligences either human or artificial.

To understand what I mean, we’re going to have to rewind the clock and understand what we mean by code.

What is programming?

When we talk about programming now, we usually trace the meaning back to Ada Lovelace - the first person to program how a computing device should work. An evening of entertainment in Victorian London might have the following program (or "programme", to use the British English spelling that would have applied at the time):

- arrive at 7pm for the champagne reception

- a recital by a promising young pianist

- a talk by Mr Charles Darwin

- a display of dancing from the debutantes

- etc…

This is a series of individual actions, to happen in a prescribed order, forming the curated program for an evening of keeping up appearances in high society. This kind of careful sequencing of events is exactly what early programming was. For example, imagine a machine that could only load, store and add numbers, and you wanted a program that added together any numbers you gave it. It might look something like this:

1LOAD_NEW_INPUT2SAVE_TO_LOCATION_13LOAD_NEW_INPUT4SAVE_TO_LOCATION_25ADD_LOCATION_1_TO_LOCATION_26SAVE_TO_LOCATION_17NULL_LOCATION_28LOAD_NEW_INPUT9etc...

Nowadays, we might loosely refer to this kind of low-level instructional programming as “machine code” - direct operations to be carried out by the central processing unit of a machine.

For the benefit of any reader who is unfamiliar - this is adamantly not what we mean when we talk about writing software today. We are more likely to write something like this:

1def fibonacci(n: int) -> list[int]:2 """3 Returns a list of the first n Fibonacci numbers.4 @param n: The number of Fibonacci numbers to return.5 @return: A list of the first n Fibonacci numbers.6 """7 if n <= 0:8 raise ValueError("N must be greater than zero")9 elif n == 1:10 return [0]11 elif n == 2:12 return [0, 1]13 else:14 sequence = fibonacci(n-1)15 sequence.append(sequence[-1] + sequence[-2])16 return sequence

This is not a set of instructions to be carried out by a processor capable only of storage, retrieval and basic mathematical operations. It is an algorithm, specified using mathematical expressions, which relies on concepts such as variables, functions, recursion, indexing and so on, and which must be interpreted and then compiled into a sequence of machine operations to be used on a hardware processor.

What is software?

Looking at our Fibonacci function above, there are some things we can note about software:

- It is written in text, which contains some English and some mathematical language

- It has semantic and syntactic constructs that dictate how it should be interpreted

- It must be translated into machine instructions in order to actually work

This is what we usually mean by “code”, and it is several steps removed from the actual computation work that is performed. In this case, we have a function which is written in Python. When it is run, the first thing that will happen is that it will be compiled to bytecode - a simplified set of operations that starts to look a bit like our machine code from earlier.

In Python, it is easy to print out an annotated view of that bytecode:

1import dis2dis.dis(fibonacci)

Which gives the following output:

1 1 RESUME 023 7 LOAD_FAST 0 (n)4 LOAD_CONST 1 (0)5 COMPARE_OP 58 (bool(<=))6 POP_JUMP_IF_FALSE 11 (to L1)78 8 LOAD_GLOBAL 1 (ValueError + NULL)9 LOAD_CONST 2 ('N must be greater than zero')10 CALL 111 RAISE_VARARGS 11213 9 L1: LOAD_FAST 0 (n)14 LOAD_CONST 3 (1)15 COMPARE_OP 88 (bool(==))16 POP_JUMP_IF_FALSE 3 (to L2)1718 10 LOAD_CONST 1 (0)19 BUILD_LIST 120 RETURN_VALUE2122 11 L2: LOAD_FAST 0 (n)23 LOAD_CONST 4 (2)24 COMPARE_OP 88 (bool(==))25 POP_JUMP_IF_FALSE 4 (to L3)2627 12 LOAD_CONST 1 (0)28 LOAD_CONST 3 (1)29 BUILD_LIST 230 RETURN_VALUE3132 14 L3: LOAD_GLOBAL 3 (fibonacci + NULL)33 LOAD_FAST 0 (n)34 LOAD_CONST 3 (1)35 BINARY_OP 10 (-)36 CALL 137 STORE_FAST 1 (fib_sequence)3839 15 LOAD_FAST 1 (fib_sequence)40 LOAD_ATTR 5 (append + NULL|self)41 LOAD_FAST 1 (fib_sequence)42 LOAD_CONST 5 (-1)43 BINARY_SUBSCR44 LOAD_FAST 1 (fib_sequence)45 LOAD_CONST 6 (-2)46 BINARY_SUBSCR47 BINARY_OP 0 (+)48 CALL 149 POP_TOP5051 16 LOAD_FAST 1 (fib_sequence)52 RETURN_VALUE

Is this what is actually then run as the program? Well no, not quite. This is in fact an instruction set to be used by another piece of software - the Python Virtual Machine (the PVM). The PVM directly interprets and executes the bytecode instructions (opcodes like LOAD_CONST, BINARY_OP, etc.) one by one. How these instructions are executed on the physical machine then depends on more software (the operating system) and on hardware (the physical processor architecture). There are several layers further before we start actually moving electrons around on the microprocessor.

Why do we write code?

Given the massive gulf between the code that software engineers write, and the operations carried out by computer processors, we might well question why there are so many layers of abstraction. But of course if you read this far, it is obvious - the point of the code is that it is readable by the engineer. Code is an effective and comprehensible shorthand that is analogous to mathematics - allowing brevity in the expression of complexity by defining additional terms and syntax that encode meaning.

The example we have above is trivially simple, and yet the bytecode is already obscure to the point that it is near impossible to understand what the function actually does by reading it without context. Imagine the orders-of-magnitude difference for a complex application like a web browser or a payment processing system, and remember that engineers use abstraction in order to enable reasoning about large complex systems.

Software, then, is using textual language to enable two things simultaneously:

- The definition of a program of instructions to perform on hardware devices such as processors, memory storage, and networks.

- Comprehensible documentation of what that program does and how it works, in a way that enables reuse of encapsulated functionality between programs.

This is, of course, a parallel to many other languages that we use as humans - mathematics, musical notation, and of course, written and spoken language itself, in which vocabulary grows to express increasingly complex concepts in simple ways.

Do LLMs think in the same way?

Is generation of code really the best way for LLMs to use computers? LLMs are really “Large Sequence-of-Token Models”, where tokens are parts of written language syntax - words, parts of words, punctuation etc. These sequences of tokens might more broadly be called “text”, a more accurate description than “language” when describing the corpora of internet-scraped content upon which all frontier models are trained. Those corpora, of course, contain many examples of code, and it is from this that LLMs were first able to generate software based on language input. Over time, given the apparent utility of generating working code, many models have been tuned and specialised to do this job even better.

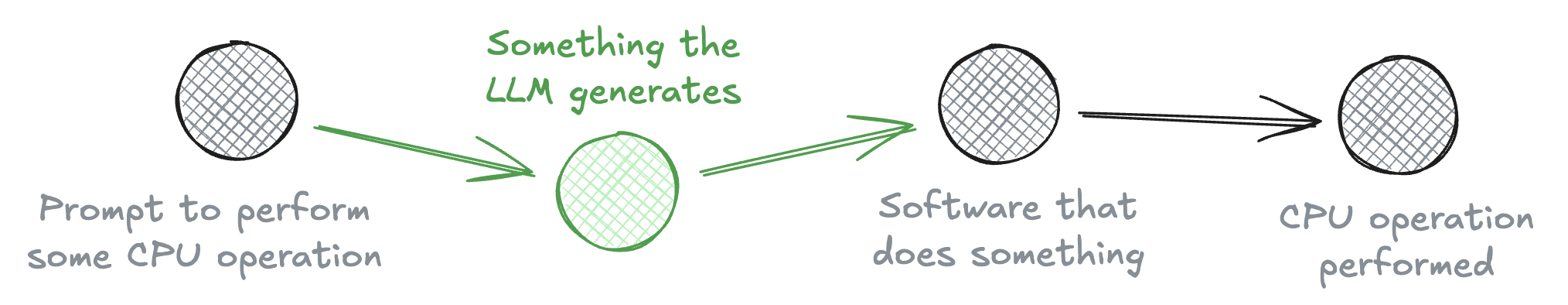

But revisiting our questions above, is generation of software really the endgame for this story? Let’s consider a scenario in which we are prompting an LLM with the intention of performing the type of task that software is typically designed to accomplish. A simple example could be: accept some input identifier, look up corresponding data from a database, perform computation on the output data, render the results as a webpage, return to the user. The current paradigm for using AI in this chain would involve using an LLM to write some code, which is then executed as traditional software:

This is a sensible state of affairs when the outputs of LLMs are slow, costly and unpredictable. But each of these three aspects are currently undergoing exponential improvement. By generating the code in advance, the software engineer who is preparing the functionality is able to verify that it works, conforms to best practices, and meets the requirements of the feature. The code can be saved, compiled, and used repeatedly and predictably. And we avoid spending compute cycles (i.e. time and money) on getting an LLM to do the same thing over and over again.

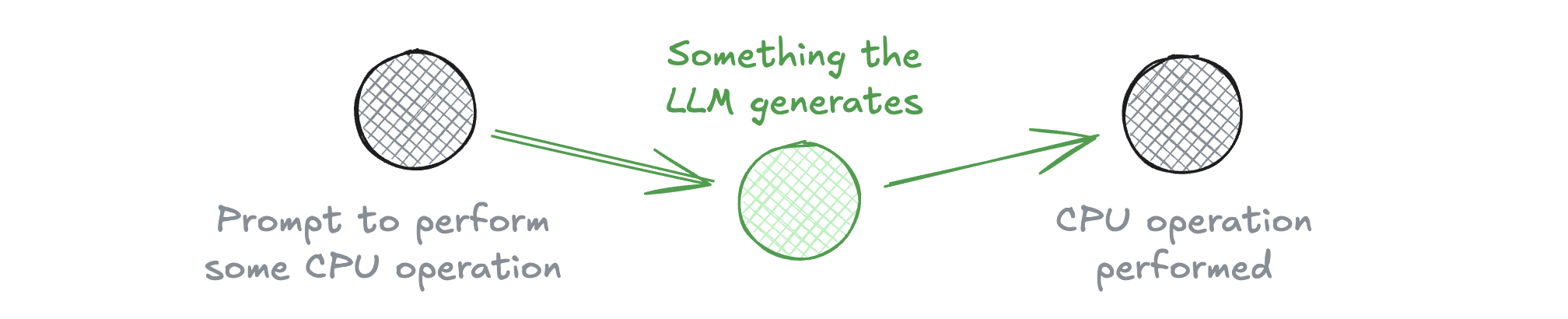

However, the bitter lesson that we learn over time may be to cede more control to the LLM, and allow it to generate the machine code directly, bypassing software entirely:

If we do this, we will have effectively ended not only the practice of writing software as a discipline, but entirely removed the need for persistent, intelligible and tweakable software. While this may sound like an unexpected paradigm shift (and frankly, expensive, unreliable and mildly stupid to attempt today), I believe there is a good chance that this will prove to be closer to a likely end state for AI.

As we move to a world where machine computation and intelligence is agentic (i.e. empowered with agency to choose the best way to impact the real world), I believe direct invocation of hardware compute may ultimately prove a more powerful and efficient model than forcing such intelligence to operate via the crutch of programming languages - a paradigm created in a world that was limited by the boundaries of human cognition. Whether that paradigm is proved obsolete by LLMs (i.e. models designed to operate well in linguistic contexts) or some future paradigm shift in machine intelligence remains to be seen.